Technical issues and methodological challenges of field engineering for research

Recording a polyartefacted seminar


Justine Lascar, Oriane Dujour, « Technical issues and methodological challenges of field engineering for research », Interactions and Screens in Research and Education (enhanced edition), Les Ateliers de [sens public], Montreal, 2023, isbn:978-2-924925-25-6, http://ateliers.sens-public.org/interactions-and-screens-in-research-and-education/appendix.html.

version:0, 11/15/2023

Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0)

The production and processing of corpora do not only raise methodological questions but also imply a reflection on the articulation between the work of collecting data and the requirements of the analysis. In the field of linguistic interaction analysis, this translates in particular into attention to the linguistic and multimodal details produced, mobilised, interpreted by the participants and made available by adequate recording, transcription and analysis techniques. In other words, the requirement of continuous accessibility of the relevant details of the interactions governs all the stages of the constitution and analysis of the corpora: from data collection in the field to the “manufacturing” phase, which includes audio-visual editing, transcription, alignment, annotation, up to the actual analysis phase.

These different stages in the processing of the “Digital Presences” corpus (available on Ortolang) are described here by considering the overlapping of numerous aspects: technical, methodological, theoretical and legal.

Recording devices

The first step in analysing interactions is the collection of situational data. Far from being a preliminary, secondary and marginal stage that could be conceived independently from the analytical objectives, data collection is an integral part of the overall process of analysis.

Collecting data is not a one-off, purely technical stage, but an undertaking that involves knowledge of the field and the collectors’ relationships with the various actors involved, and the practical and technical dimensions of recording.

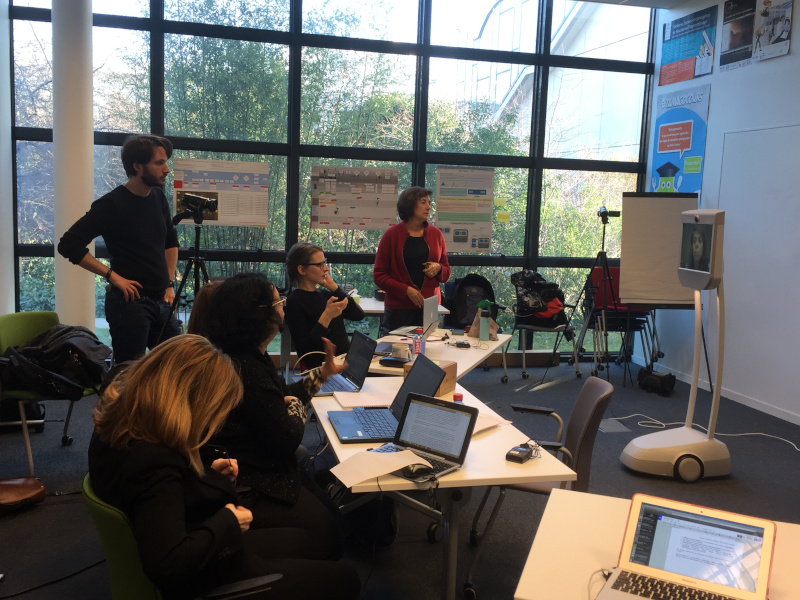

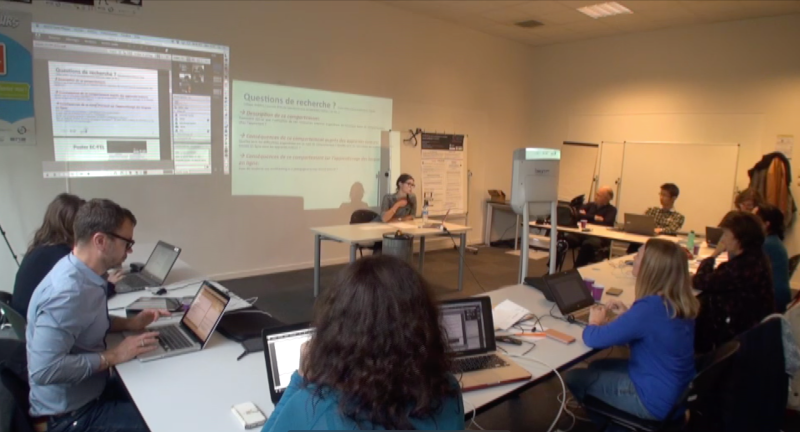

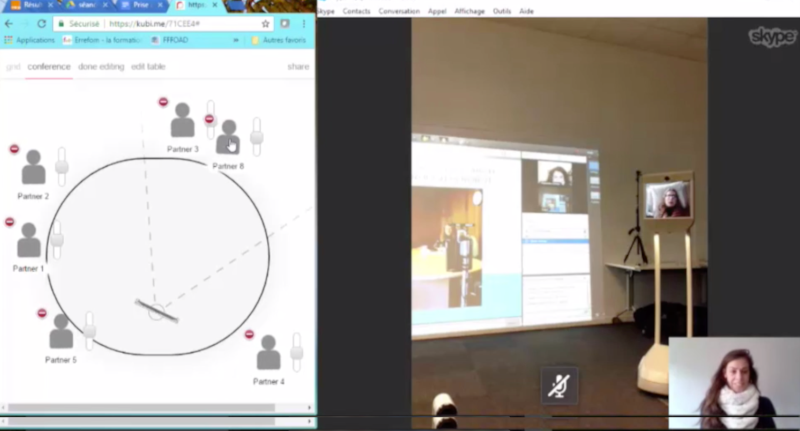

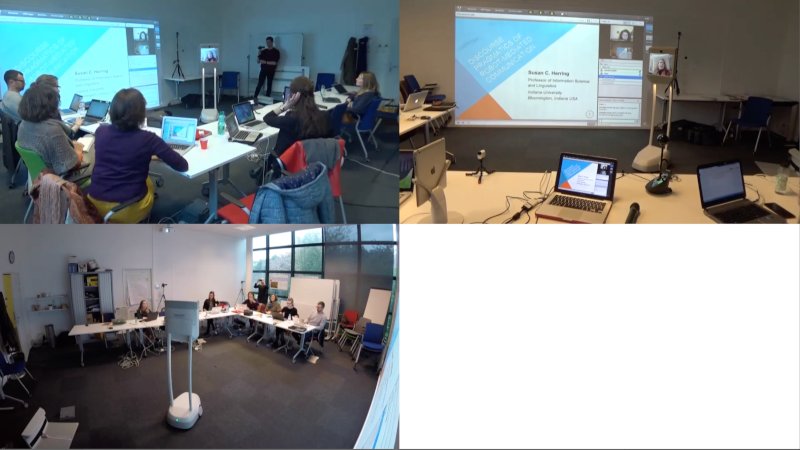

In the tradition of recording methods used in Conversation Analysis and educational sciences, we recorded the IMPEC seminars using several cameras in order to multiply the points of view. This allowed us to preserve the ecology of the situation and to have access to all the details of the interactions, such as gaze, gestures and postures, but also to all the online communication through screen captures taken from the different artefacts used during the seminar (Adobe Connect, Kubi and Beam telepresence robots). This research project started when we had just acquired new recording devices, in particular action cameras such as the GoPro and the Sony Action Cam, but also Kodak SP360 360° cameras. These cameras could not only be set up in places that were previously inaccessible to conventional tripod cameras, but could also capture wider shots thanks to their integrated wide-angle lenses. The Digital Presences research project thus provided the opportunity to test these different devices and to reflect on their specificities and their contribution to the analysis of interactions.

The members of the “Digital Presences” group opened the doors of the seminar to us and allowed us to test different device configurations. The Laboratory of Pedagogical and Digital Innovation Room (LipeN) is a space designed to accommodate collaborative methodological tools, and is located within the French Institute of Education (IFE) at the ENS of Lyon. The data collection had to take into account the constraints linked to the location and to the different modalities of the seminar.

The location:

- the room has a glass wall, which made it difficult to arrange the cameras without backlighting;

- one of the walls is covered with a whiteboard, which constrained where we could place the cameras;

- the furniture is modular and there are sockets on the floor. We had to take this into account for the trajectories of the Beam robot in particular.

Organisation of the seminar:

- there were several configurations: conference mode, with the speaker(s) in the centre facing the audience in a U-shape; work-group mode, with participants seated around two L-shaped tables; and, on one occasion, a conference mode in which the speaker piloted the Beam robot with the audience facing her around an L-shaped table. For each of these configurations, we needed a specific recording set-up.

Video recording

Recording corpora is a material and technical operation that must be designed and carried out according to the objectives and objects of analysis. This operation aimed at recording audio/video data in order to make relevant linguistic, multimodal and situational details (gaze, gestures, movements, actions, objects, physical setting) available, and therefore analysable. We sought to record both the details that participants exploited in a situated way to produce and interpret the intelligibility of their behaviour, and those that the analysts exploited to give an account of the organisation of the interaction, based on the orientations shown by the participants.

The recordings were therefore governed by the need to take into account

- the temporal unfolding of the interaction;

- the ecology of the interaction, i.e., the way it unfolded in space;

- the framework of participation that characterised the interaction;

- the objects that were mobilised by the interactants.

To achieve these objectives, we used a variety of recording equipment. Firstly, we used two Sony XR550 camcorders on tripods to provide two views of the seminar:

- the first camera was aimed at the audience – View 1.

- the second was placed at the back of the room facing the speakers and the video content projected on the wall (Adobe Connect and presentation slideshow) – in the group work configuration, the two views were complementary – View 2.

A GoPro Hero5 camera was fixed high up on the whiteboard wall with a magnetic GorillaPod to record an overview of the room and the movements of the Beam robot – GP view.

We set up these three views for all the seminars we recorded.

During the first session, we also tested a Kodak SP360 camera, positioned on a piece of furniture so it could be level with the interactants’ faces, which interfered with the movements of the Beam robot. Moreover, the analysis of the 360° data is complex because it involves constructing a point of view a posteriori. We therefore abandoned this solution for the following sessions.

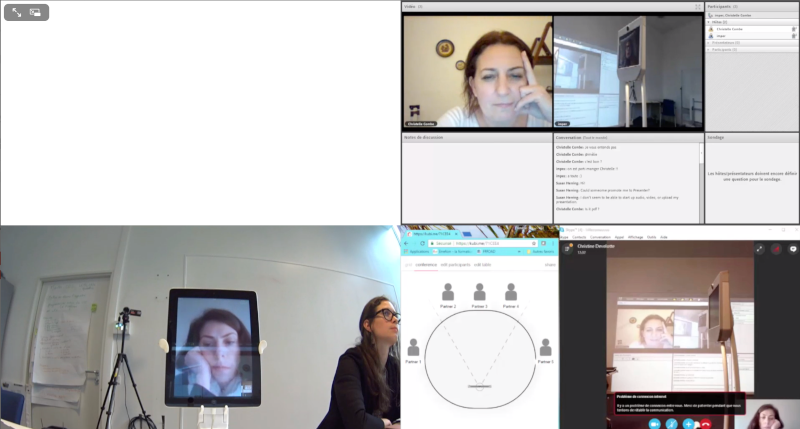

To record data involving the remote participants (different individuals were present in each session), we had to take into account which digital artefacts were being used and decide how to collect their data.

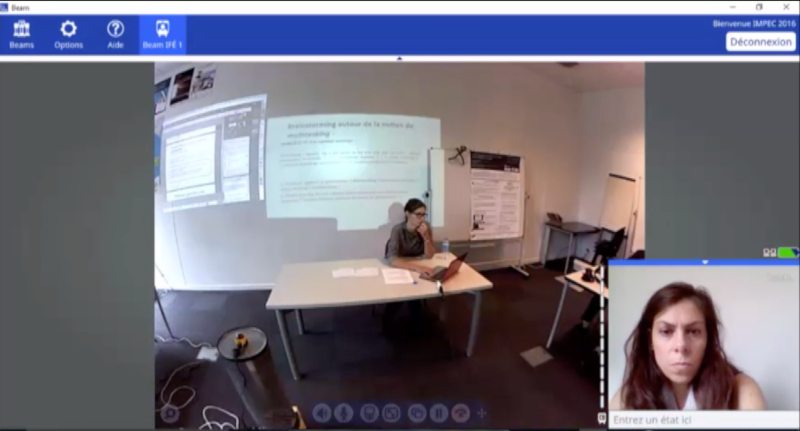

For the Beam, we asked the pilot to record her screen and a view of her environment showing her interacting in front of her screen.

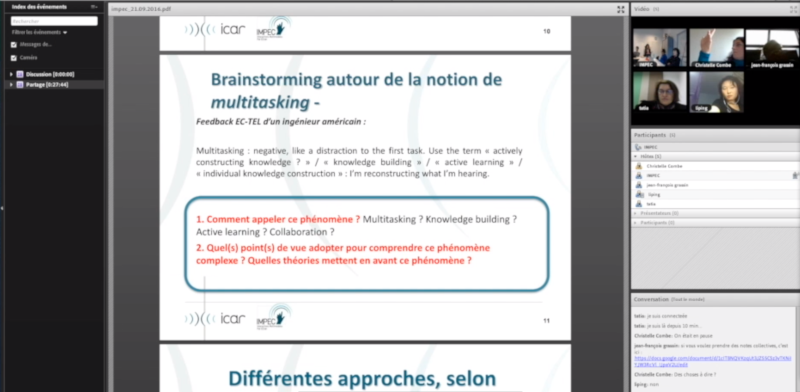

In order to record the remote use of Adobe Connect, we also asked one of our colleagues in Aix-en-Provence to record her screen.

For Adobe Connect used in-situ in Lyon, we also collected a screen capture from a participant present in the LipeN room as well as the projection of the interface on the wall of the room using the View 1 Camera.

The Kubi stream was impossible to retrieve directly from the iPad, as the combination of the remote control and the dynamic screen capture made the connection lag; we therefore retrieved the movements of the Kubi and the image from the iPad using a Sony Action Cam placed a few centimetres away from the artefact using its built-in wide angle. To complete the picture, the screen of the computer that controlled the Kubi remotely was also recorded.

We therefore obtained between 5 and 8 different video streams for each session: between 3 and 4 in-situ views, and between 2 and 4 dynamic remote views and screen captures.

Audio recording

We used wireless Sennheiser Ew100 HF microphones connected to a Zoom H6 multitrack recorder to record the sound of the seminars. One microphone was worn by Christine, one by the speaker, and two others with foam insulation were placed in the room on tables close to the participants.

The multitrack recorder allows the sound to be monitored outside the room and the synchronised tracks to be recovered.

For each session, we therefore had four separate audio streams.

Video editing and export

The editing phase is also crucial in making all the elements of the interaction available and intelligible. The Cellule Corpus Complexes, a transversal research support team at the ICAR laboratory, contributes its expertise in post-production data processing (synchronisation of the different sources, anonymisation, audio and video editing, etc.).

We work with Final Cut Pro X on Mac computers. After having created a library that gathered all the corpus data serving as an archive, we imported all the recorded and recovered streams. The resolution, bit rates and number of frames per second could differ depending on the source. We listed and analysed them to prevent possible processing problems. The first important step was to synchronise the different audio and video streams available for each seminar (between 8 and 12 tracks). While some of the synchronisation could be automated based on the soundtracks of the different streams, some was done manually.
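The automated part of this synchronisation can be illustrated outside of any editing software. The sketch below is not the procedure built into Final Cut Pro, whose internals are not described here; it is a minimal illustration, assuming two mono soundtracks sampled at the same rate, of how a time offset between two streams can be estimated by cross-correlating their audio. The function name `estimate_lag`, the `max_lag` parameter and the synthetic signals are illustrative assumptions.

```python
def estimate_lag(reference, other, max_lag):
    """Return the lag (in samples) that best aligns `other` with `reference`.

    A positive lag means `other` started recording later than `reference`,
    i.e. other[i] corresponds to reference[i + lag].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Raw cross-correlation score for this candidate lag.
        score = 0.0
        for i, sample in enumerate(other):
            j = i + lag
            if 0 <= j < len(reference):
                score += sample * reference[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic example (hypothetical data): a short "clap" surrounded by silence.
reference = [0.0] * 20 + [1.0, -2.0, 3.0, -1.0, 2.0] + [0.0] * 20
late_camera = reference[5:]  # this stream started 5 samples later

lag = estimate_lag(reference, late_camera, max_lag=10)
print(lag)  # 5
```

Dividing the lag by the sample rate gives the offset in seconds. This brute-force loop is only practical for short excerpts; on real recordings one would typically correlate a downsampled excerpt or use an FFT-based correlation, and streams with poor or no sound would still have to be aligned by hand, as was the case here.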

The process went as follows: once the synchronisation was complete, we decided on a common start and end time for all the audio and video tracks; each view was thus exported as a single file but with the same duration as all the others. The video files were exported in .mp4 format with a resolution of 960 × 540, resulting in files that were not too large but of sufficient quality to provide access to the details of the interaction (the files had an average duration of one hour, and a size of about 1.2 GB each). The audio tracks were re-exported in .wav format, so as not to lose any information.
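The logic of a common start and end time can be sketched as follows. We assume here that the common window is simply the span during which every synchronised stream was recording; the text only states that a common start and end were decided on, so this rule, the `Stream` class and the example offsets are illustrative assumptions. The last function checks the order of magnitude of the export settings: a one-hour file of about 1.2 GB corresponds to an average bit rate of roughly 2.7 Mbit/s (taking 1 GB as 10^9 bytes).

```python
from dataclasses import dataclass


@dataclass
class Stream:
    name: str
    start: float     # position on the shared timeline after synchronisation (s)
    duration: float  # length of the recording (s)


def common_window(streams):
    """Return (start, end) of the span covered by every stream."""
    start = max(s.start for s in streams)
    end = min(s.start + s.duration for s in streams)
    if end <= start:
        raise ValueError("the streams do not overlap")
    return start, end


def trim_points(stream, window):
    """Return the in and out points of the window inside one stream's own file."""
    start, end = window
    return start - stream.start, end - stream.start


def average_bitrate_mbps(size_gb, duration_s):
    """Average bit rate in Mbit/s for a file of size_gb (10^9 bytes per GB)."""
    return size_gb * 1e9 * 8 / duration_s / 1e6


# Hypothetical offsets and durations, for illustration only.
streams = [
    Stream("View 1", start=0.0, duration=3700.0),
    Stream("View 2", start=12.5, duration=3650.0),
    Stream("GP view", start=30.0, duration=3800.0),
]
window = common_window(streams)
print(window)                                   # (30.0, 3662.5)
print(trim_points(streams[1], window))          # (17.5, 3650.0)
print(round(average_bitrate_mbps(1.2, 3600), 2))  # 2.67
```

Because every exported file is cut to the same window, a given timecode points to the same moment of the seminar in every view.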

Once the files were exported, it was easy to navigate from one to the other due to their shared timing.

We then created multi-view edits using QuickTime Pro 7 software according to the team’s needs.

This involved discussing which views to focus on and how to arrange them. In the present case, several multi-view edits were made: for example, one with only the tracks filmed in-situ in Lyon, one with these same tracks and the Adobe Connect screen capture, and one with only the digital artefacts being used.

In addition to the seminar sessions, the “Digital Presences” corpus contains 17 video and audio interviews of the different team members. They were conducted after the seminars together with a questionnaire. The interviews were also transcribed.

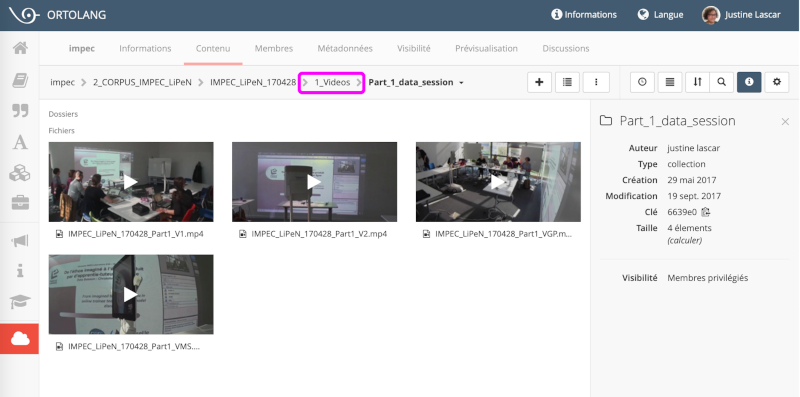

All the audio and video tracks of the seminars, the multi-view edits and the interviews were made available to the whole team via the Ortolang platform (EquipEx of the TGIR Humanum). The corpus is structured and archived so as to be accessible to the members of the group in a secure manner.

Video editing choices made for a video-enhanced publication

Synopsis – collaborative work – method

The choice to publish the book in an online digital format gave the authors greater freedom to illustrate their concepts, particularly through the possibility of integrating videos into the text. The creation of the video clips aimed to add a layer of analysis through the editing processes, in addition to the raw data from the seminars.

To facilitate comprehension and avoid interrupting the flow of the article, we chose to create very short clips (less than 3 minutes) based on the model of 1 clip = 1 concept.

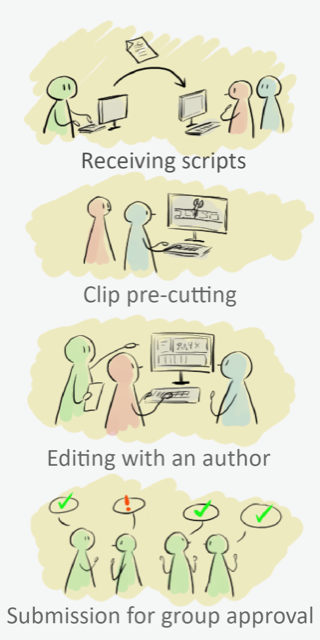

The clips were created in close collaboration with the authors of the book in order to preserve their intention as much as possible. Each example combines technique and analysis. The authors provided us with a list of the videos selected for study, their location in the articles and the extracts and views necessary for their creation. They then produced a written synopsis for each video clip, bringing together the various audio-visual materials and additional elements (transcription, subtitling, focus, commentary, etc.).

Following this framework, we created an initial sequence with the different shots and timings requested and ensured that the different views were synchronised in order to avoid time lags.

We then repeated this editing test in the presence of one of the authors of the chapter, or by video conference when necessary (the digital editing of the book, which began in 2019, was completed during the lockdown, so some of the clips were produced remotely). The process is the result of a dialogue between the researcher and the members of the Cellule Corpus Complexes technical team about the technical proposals. Audio-visual editing tools were, in fact, used to interpret the discourse of the researcher.

The status of the video changes from raw data to a product resulting from the analysis of this data. Our aim was to reveal the researcher’s point of view, i.e., to show their analysis through editing.

The video clips are treated as inseparable from the analysis presented in the articles, rather than as independent objects. Therefore, we have chosen not to contextualise the extract by detailing the subject matter in the video, since these elements are available in the associated article; this avoids a visual information overload and considerably shortens the length of the clip, so as not to be redundant with the written analysis.

Focusing attention through editing

To illustrate the authors’ concepts, we used editing techniques to highlight specific elements of the corpus of data. For example, we used these different techniques to focus the reader’s attention on a part of a scene:

- Progressive zoom: this allows an element to be enlarged, more or less quickly depending on the rhythm of the extract, either to completely cover the shot underneath or to have a top layer in order to show several elements at once. We used it in particular to show shots from within the artefact from which they were taken (e.g., zooming in on the view of the Kubi screen and making the enlarged picture emerge from that same screen). From an analytical point of view, zooming allows us to convey focus.

- Crop: the shot is cropped, usually after being enlarged, to remove unnecessary elements from the image. Cropping can be done either by showing the original frame and the frame change or by showing only the cropped shot.

- Blurring: when layers are superimposed, for example after progressive zooming in, blurring the lower layer makes it less visible and gives the reader less visual information. We used this technique when the focus needed to be on the Adobe Connect chat window.

- Circles: more simply, we often used turquoise circles to draw the viewer’s attention to a specific element or to link a descriptive note to the element involved.

Similarly, depending on the context, we selected the audio tracks to be used and manipulated in order to focus on the exchanges, or conversely, to reduce the sound information.

Adjusting the rhythm of the video clips

We paced the clips so that they remained short, and so the reader was not cut off from the text for too long.

In general, we mainly used cuts to change shots but, in some cases, we used the zoom to indicate which interface the new shot came from. When it was important to show several views simultaneously (for example, to show the same action from two different angles), we used a split screen, i.e., dividing the screen into two or more parts, each filled by a different shot.

In cases where the analysis involved a long extract or even a complete session, we used two procedures:

- Acceleration: an 8-fold or more increase in the speed of the video.

- A fade to black between two shots: the first shot darkens until the screen is completely black and then the second shot gradually appears to indicate a time jump.
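To give an order of magnitude for the acceleration procedure, the short calculation below shows how long a sequence lasts once accelerated, and how much source material fits in a clip at a given speed factor. The function names are illustrative assumptions; the factor of 8 and the 3-minute limit are the values mentioned in this text.

```python
def accelerated_duration(source_seconds, factor=8):
    """Duration on screen of a sequence played `factor` times faster."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return source_seconds / factor


def source_covered(clip_seconds, factor=8):
    """Amount of source material shown by an accelerated clip."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return clip_seconds * factor


print(accelerated_duration(3600) / 60)  # 7.5  (a one-hour session, in minutes)
print(source_covered(180) / 60)         # 24.0 (minutes shown by a 3-minute clip)
```

At 8 times the normal speed, a complete one-hour session would therefore still exceed the 3-minute limit, which is why higher factors or a fade to black marking a time jump were sometimes needed.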

Conveying a clear analysis

The clarity of the situations depended in particular on a visual contextualisation of the superimposed shots. This was achieved by the technique, mentioned above, of zooming in from the artefact, but also by applying filters to the shots. For example, in order to indicate the sequences that were accelerated, we applied a visual effect (Frame in Final Cut Pro) that streaked the image with thin green stripes, and added a double animated arrow, similar to the Fast Forward symbol on VCRs.

As another example, for the shots from one of the recording cameras, we used the cam recorder filter, which applies a frame and a symbol indicating the current recording.

In order to clarify certain passages in the video or to convey a specific point of the researcher’s analysis, we also used text boxes:

- Text in white Courier font on a dark grey band: used for transcriptions of spoken words when these are important for the analysis.

- Text in black Comfortaa font on a turquoise band: used to describe or contextualise an action, and to propose an analysis.

- Text in black Comfortaa font in a turquoise bubble: used for messages posted in the chat window. The shape and animation of the bubble are reminiscent of instant messengers.

It was often difficult to find a good compromise between the clarity and the brevity of the textual explanations, which had to be easy to read without drawing too much attention away from the clip. The authors chose the sentences to use in the edited video, and the rewordings were chosen together with the technical team.

Export and archiving

A major issue was to find a secure, permanent storage place that would allow the videos to be broadcast on the publisher’s website. We chose to host them on the ATV (Archiving and Transcoding Video) platform created and managed by the ENS, where we archived the clips in .mp4 format after indexing them. ATV also allows users to upload enriched videos, for example, with a subtitle file.

Collaborating with the different authors of this book from various disciplinary fields has been a truly enriching experience. The members of the Cellule Corpus Complexes were part and parcel of the research team throughout the phases of the project, from data collection to the publication of the book. Our thoughts and experiences nourished each other. This five-year collaborative project has allowed us to explore, test and experiment with our working methods, and to enrich them with different disciplinary perspectives, always with mutual benevolence.